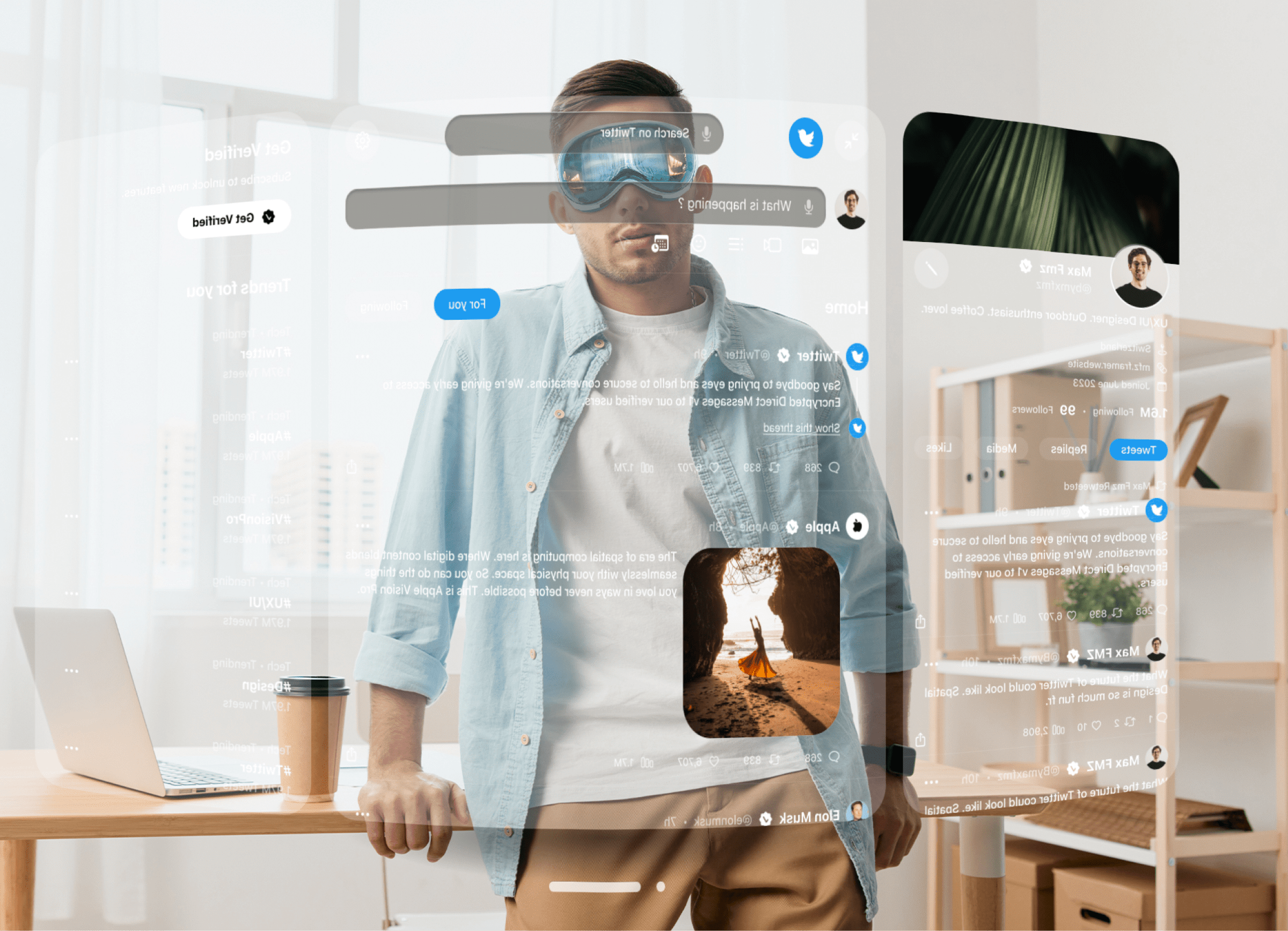

We support our clients throughout the entire product development journey, from concept to launch. Because many of you are interested in building products for Apple Vision Pro, we’ve consolidated our team’s expertise into a single article. It is a step-by-step guide to creating a product for Apple Vision Pro, so you can move through the process smoothly and effectively.

Create a Concept

The first thing you need to do is come up with a concept for your app. Think of this as the blueprint that will guide the entire development process. This stage involves:

- Idea Generation: Coming up with potential app ideas based on market needs, user preferences, or specific problems to solve.

- Market Research: Analyzing the market to understand existing solutions, competitors, target audience, and potential gaps or opportunities.

- Defining Objectives: Clearly defining the goals and objectives of the app. This includes identifying the problem it aims to solve, the target audience, and the desired outcomes.

- Conceptualization: Translating the initial idea into a concrete concept by outlining core features, user interface design, user experience flow, and technical requirements.

- Prototyping: Creating wireframes or prototypes to visualize the app’s user interface and interactions. This helps in refining the concept and gathering feedback from stakeholders.

- Feasibility Analysis: Assessing the technical feasibility, resource requirements, and potential challenges associated with developing the app.

- Validation: Testing the concept with potential users or stakeholders to validate its viability and gather feedback for further refinement.

Overall, creating a concept sets the foundation for the app development process, guiding subsequent stages such as design, development, testing, and deployment. It helps ensure that the final product meets user needs, aligns with business objectives, and stands out in the competitive app market.

Market Research

The next step in developing a product for Apple Vision Pro involves conducting thorough market research. This crucial step provides insights into the competitive landscape, user preferences, and emerging trends, which are vital for shaping your product strategy and positioning. To perform effective market research:

- Identify Your Target Audience: Define the demographics, preferences, and behaviors of your target users. Understand their needs, pain points, and expectations regarding immersive experiences offered by Apple Vision Pro.

- Analyze Competitors: Study existing apps and solutions within the Apple Vision Pro ecosystem. Assess their features, user experience, pricing models, strengths, and weaknesses. Identify gaps or areas where you can differentiate your product.

- Explore Market Trends: Stay updated on industry trends, technological advancements, and consumer preferences related to augmented reality (AR) and virtual reality (VR) experiences. Identify emerging opportunities or niche markets that align with your product concept.

- Gather User Feedback: Engage with potential users through surveys, interviews, or focus groups to gather feedback on their preferences, pain points, and expectations regarding AR/VR applications. Incorporate this feedback into your product development process to ensure relevance and user satisfaction.

- Evaluate Technical Feasibility: Assess the technical requirements, limitations, and capabilities of Apple Vision Pro. Understand the tools, frameworks, and APIs available for developing immersive experiences on the platform. Determine the feasibility of implementing your desired features and functionalities within the constraints of the platform.

By performing comprehensive market research, you gain valuable insights that inform your product strategy, enhance user experience, and increase the likelihood of success in the competitive Apple Vision Pro marketplace.

Choose Your Apple Vision Pro Features

After conducting market research, the next crucial stage in developing a product for Apple Vision Pro is selecting the features that will define your app’s functionality and user experience. Here’s a breakdown of key features to consider:

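- Eye-tracking: Apple Vision Pro uses eye tracking as its primary way of targeting: users look at an element and tap their fingers together to select it. For privacy, visionOS does not tell apps where the user is looking. Instead, the system highlights the element under the user’s gaze and delivers the tap to your app, so design intuitive interactions around these hover effects and selections rather than raw gaze data.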

- High-quality 3D content: Incorporate high-fidelity 3D models, animations, and environments to deliver visually stunning and immersive experiences that captivate users and enhance their engagement with the app.

- Live video streaming capabilities: Enable real-time video streaming within the app, allowing users to share live experiences, events, or demonstrations with others, fostering collaboration and social interaction in virtual environments.

- MR/VR-based calls and text messaging: Add mixed reality (MR) and virtual reality (VR) communication features, such as in-experience calls and text messaging, so users can talk and collaborate without leaving the immersive environment.

- Real-world sensing and navigation: Use Apple Vision Pro’s scene understanding, such as world tracking and plane detection, to anchor content to real surfaces, guide users through physical spaces, and build context-aware interactions that stay relevant in different environments.

- Support for third-party applications: Enhance the versatility and functionality of your app by providing support for third-party applications and services, allowing users to seamlessly integrate external tools, content, or functionalities into their immersive experiences.

By carefully selecting and integrating these Apple Vision Pro features into your app, you can create a compelling and differentiated product that delivers immersive, engaging, and valuable experiences to users, driving adoption and satisfaction in the competitive AR/VR market.
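The eye-tracking and 3D content points above can be sketched in RealityKit. This is a minimal sketch, not a complete implementation, assuming a visionOS project that shows the view in a volumetric window or immersive space; `GazeAndTapView` is a hypothetical name. It attaches `HoverEffectComponent` so the system highlights the sphere when the user looks at it, and handles the look-and-tap selection with `SpatialTapGesture`. The app never receives gaze coordinates, only the tap on the targeted entity.

```swift
import SwiftUI
import RealityKit

/// Hypothetical view: a sphere that the system highlights when the user
/// looks at it, and that grows each time the user looks at it and taps.
struct GazeAndTapView: View {
    var body: some View {
        RealityView { content in
            let radius: Float = 0.1
            let sphere = ModelEntity(
                mesh: .generateSphere(radius: radius),
                materials: [SimpleMaterial(color: .blue, isMetallic: false)]
            )
            // Gestures only reach entities that have both an input target and a collision shape.
            sphere.components.set(InputTargetComponent())
            sphere.components.set(CollisionComponent(shapes: [.generateSphere(radius: radius)]))
            // The system draws the gaze highlight; the app never learns the gaze position.
            sphere.components.set(HoverEffectComponent())
            content.add(sphere)
        }
        .gesture(
            SpatialTapGesture()
                .targetedToAnyEntity()
                .onEnded { value in
                    // Runs after the user looks at the sphere and pinches.
                    value.entity.scale *= 1.2
                }
        )
    }
}
```

An entity needs both `InputTargetComponent` and `CollisionComponent` to receive gestures; leaving out either one is a common reason taps appear to do nothing.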

Determine Your App Development Stack

Once you’ve identified the features for your Apple Vision Pro app, the next step is to determine your app development stack. This involves selecting the tools, frameworks, and technologies that will enable you to bring your concept to life efficiently and effectively. Here’s how to approach this stage:

Evaluate SwiftUI, ARKit, and RealityKit

- SwiftUI: Consider using SwiftUI for building the user interface (UI) of your app. It offers a modern and declarative approach to UI development, simplifying the process of creating dynamic and responsive interfaces for your immersive experiences.

- ARKit and RealityKit: For AR and VR functionality, use Apple’s ARKit and RealityKit frameworks. On visionOS, ARKit supplies data about the user’s surroundings and hands, such as plane detection, scene reconstruction, and hand tracking, while RealityKit renders 3D content and handles animation, physics, and interactions within your app.
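To show how these frameworks fit together, here is a minimal sketch of a visionOS app structure, assuming a new Xcode visionOS project; `SketchApp`, `LauncherView`, `PlaneAwareView`, and the space identifier `sketch-space` are hypothetical names. SwiftUI defines a window and an immersive space, RealityKit renders a box inside that space, and ARKit reports horizontal planes. ARKit data providers only deliver data while the app has an immersive space open, and plane detection also requires an `NSWorldSensingUsageDescription` entry in Info.plist.

```swift
import SwiftUI
import RealityKit
import ARKit

@main
struct SketchApp: App {
    var body: some Scene {
        // A regular 2D window built with SwiftUI.
        WindowGroup {
            LauncherView()
        }
        // A Full Space where RealityKit content and ARKit data are available.
        ImmersiveSpace(id: "sketch-space") {
            PlaneAwareView()
        }
    }
}

struct LauncherView: View {
    @Environment(\.openImmersiveSpace) private var openImmersiveSpace

    var body: some View {
        Button("Enter immersive space") {
            Task {
                // The result reports whether the space opened, was cancelled, or failed.
                _ = await openImmersiveSpace(id: "sketch-space")
            }
        }
    }
}

struct PlaneAwareView: View {
    // On visionOS, ARKit delivers data through providers run by a session.
    @State private var session = ARKitSession()
    @State private var planes = PlaneDetectionProvider(alignments: [.horizontal])

    var body: some View {
        RealityView { content in
            let box = ModelEntity(
                mesh: .generateBox(size: 0.2),
                materials: [SimpleMaterial(color: .orange, isMetallic: false)]
            )
            // In an immersive space the origin is on the floor below the user;
            // this places the box about 1.2 m up and 1 m in front.
            box.position = [0, 1.2, -1]
            content.add(box)
        }
        .task {
            guard PlaneDetectionProvider.isSupported else { return }
            do {
                try await session.run([planes])
                for await update in planes.anchorUpdates {
                    // Each update reports an added, updated, or removed plane anchor.
                    print(update.event, update.anchor.classification)
                }
            } catch {
                print("ARKit session failed: \(error)")
            }
        }
    }
}
```

In a real app you would typically use the plane anchors to position content, for example by placing entities at `update.anchor.originFromAnchorTransform`, rather than printing them.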

Choose Xcode as Your IDE

As Apple’s official integrated development environment (IDE), Xcode is the go-to choice for building apps for visionOS as well as iOS, macOS, watchOS, and tvOS. Use its visionOS simulator, SwiftUI previews, debugging tools, and Instruments for performance analysis, along with Reality Composer Pro, which ships with Xcode, for preparing 3D scenes, to streamline your app development process.

Consider Additional Tools and Libraries

Explore other tools, libraries, and resources that complement SwiftUI, ARKit, and RealityKit, such as:

- SceneKit: Apple’s older framework for rendering 3D scenes and effects. It can help when you are reusing existing SceneKit assets or code, but RealityKit is the framework Apple recommends for new 3D content on visionOS.

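- Core ML: Integrate Core ML, Apple’s machine learning framework, to add intelligent features to your app, such as classification or predictive modeling. Keep in mind that visionOS does not give standard App Store apps access to camera frames, so plan any camera-based recognition features around that restriction.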

- Firebase: Utilize Firebase for backend services, authentication, and cloud storage, enabling seamless integration of cloud-based functionality into your app.

By carefully determining your app development stack and leveraging technologies such as SwiftUI, ARKit, RealityKit, and Xcode, you can build a powerful and immersive Apple Vision Pro app that delivers engaging and captivating experiences to users!

Work With an App Development Company

When selecting an app development company, it’s crucial to prioritize experience and expertise in AR/VR/MR technologies. We have more than 14 years of experience with augmented reality, virtual reality, and mixed reality application development, so you can be sure that your Apple Vision Pro project is in capable hands!

Our team boasts a proven track record of successfully delivering complex projects, with skilled developers, designers, and engineers proficient in specialized technologies and platforms such as ARKit, RealityKit, Unity, and Unreal Engine. By partnering with us, you can leverage our technical expertise, innovation, and commitment to delivering high-quality immersive experiences to ensure the success of your Apple Vision Pro app!

Develop and Submit the App

The final step in bringing your Apple Vision Pro app to life is the development and submission process. Here’s how to approach this crucial stage:

Development Phase

Work closely with our experienced team of developers, designers, and engineers to translate your concept into a fully functional app. Throughout the development process, we’ll provide regular progress updates and opportunities for feedback to ensure that the app aligns with your vision and objectives.

Testing and Quality Assurance

Prior to submission, our team conducts rigorous testing and quality assurance to identify and address any bugs, glitches, or usability issues. We’ll verify that your app works reliably in the visionOS simulator and on Apple Vision Pro hardware across different physical environments, providing users with a smooth and immersive experience.

Submission to the App Store

Once the app is thoroughly tested and refined, we’ll assist you in preparing and submitting it to the Apple App Store for review and approval. Our team will ensure that all necessary documentation, assets, and compliance requirements are met to expedite the submission process.

Collect Feedback and Iterate

After the app is launched, it’s essential to collect feedback from your audience to gain insights into their experience and preferences. Based on this feedback, we’ll work collaboratively to iterate and improve the app, addressing any issues, adding new features, or enhancing existing functionalities to ensure continuous optimization and alignment with user needs and market trends.

By partnering with us for the development and submission of your Apple Vision Pro app, you can trust that we’ll guide you through each step of the process with expertise, transparency, and dedication to delivering a successful and impactful product!